Shader Texture Mapping and Interleaved Arrays

Work continues on the new Rendering System for the Vortex Engine.

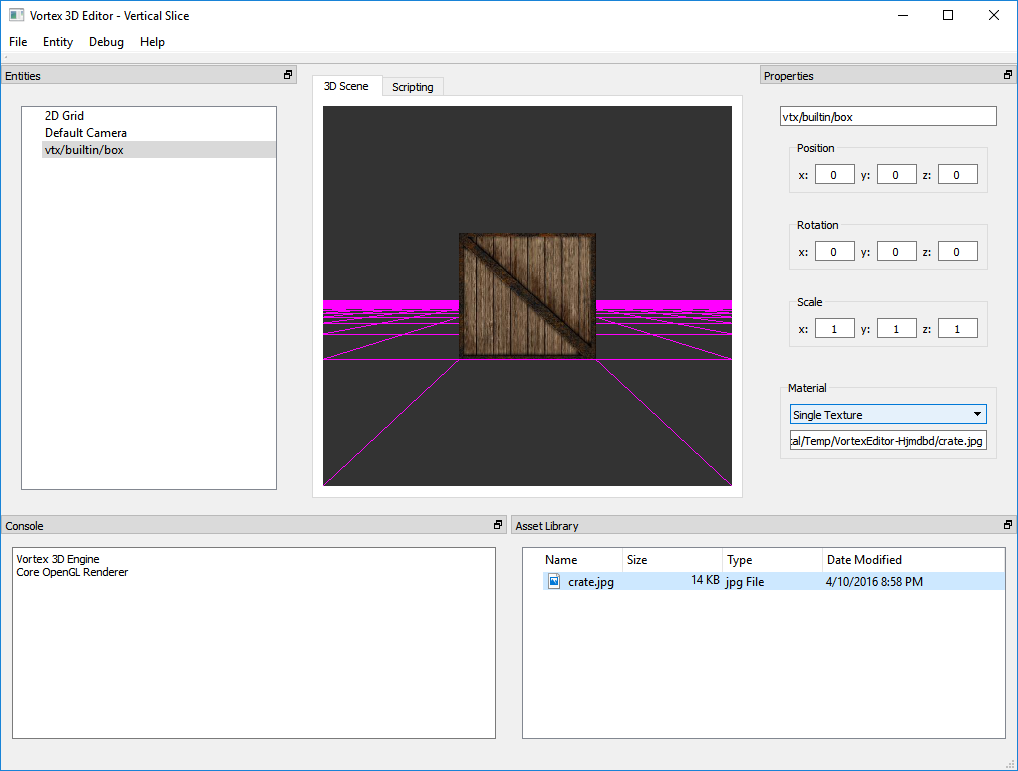

This week was all about implementing basic texture mapping using GL Core. The image above shows our familiar box, only this time it’s being textured from a shader.

A number of changes had to go into the Renderer in order to perform texture mapping. These touched almost all the layers of the Engine and Editor.

- First, I wrote a “Single Texture” shader in GLSL to perform the perspective transform of the Entity’s mesh, interpolate its UV texture coordinates and sample a texture.

- Second, I had to change the way the retained mesh works in order to be able to send texture coordinate data to the video card (more on this later).

- Finally, I had to modify the Editor UI to allow selecting which shader is to be used when rendering an Entity.
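To give an idea of what a “Single Texture” shader pair can look like, here is a minimal sketch embedded as C++ string constants. It is not the Engine's actual shader: the GLSL version, the attribute locations and every name in it (`aPosition`, `aTexCoord`, `uModelViewProjection`, `uTexture`, `vTexCoord`, `fragColor`) are assumptions made for illustration, and the per-vertex color is left unused for brevity.

```cpp
#include <string>

// Hypothetical vertex stage: applies the perspective transform to the mesh
// position and forwards the UVs so the rasterizer can interpolate them.
const std::string kSingleTextureVertexShader = R"glsl(
#version 330 core
layout(location = 0) in vec3 aPosition;  // XYZ (assumed location)
layout(location = 1) in vec2 aTexCoord;  // UV  (assumed location)
uniform mat4 uModelViewProjection;       // combined transform (assumed name)
out vec2 vTexCoord;
void main() {
    vTexCoord = aTexCoord;
    gl_Position = uModelViewProjection * vec4(aPosition, 1.0);
}
)glsl";

// Hypothetical fragment stage: samples the bound texture at the
// interpolated UV coordinates.
const std::string kSingleTextureFragmentShader = R"glsl(
#version 330 core
in vec2 vTexCoord;
uniform sampler2D uTexture;              // bound texture (assumed name)
out vec4 fragColor;
void main() {
    fragColor = texture(uTexture, vTexCoord);
}
)glsl";
```

These strings would be handed to `glShaderSource` and compiled at load time, once a GL context exists.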

As for how to submit mesh texture coordinate data to the video card: because OpenGL Core uses Vertex Buffer Objects (VBOs), it was clear that UVs had to be sent to (and retained in) GPU memory too.

There are two ways to achieve this in OpenGL. One way consists of creating several VBOs so that each buffer holds one attribute of the vertex. Position data is stored in its own buffer, texture coordinates are stored in their own buffer, per-vertex colors take a third buffer, and so on.

There’s nothing wrong with doing things this way, and it definitely works. There is, however, one consideration to take into account: when we scatter our vertex data across several buffers, the GPU has to gather it from all of them every frame as part of vertex processing.

I am personally not a big fan of this approach. I prefer interleaving the data myself once and then sending it to the video card in a way that’s already prepared for rendering. OpenGL is pretty flexible in this regard and it lets you interleave all the data you need in any format you may choose.

I’ve chosen to store position data first, then texture coordinate data and, finally, color data (XYZUVRGBA). The retained mesh class will be responsible for tightly packing vertex data into this format.
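As a rough illustration of the packing step, the sketch below interleaves three separate attribute arrays into a single XYZUVRGBA array. The function name `interleaveVertices` and the use of `std::vector<float>` are assumptions for this example; the retained mesh class in the Engine may well be organized differently.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Floats per vertex in the XYZUVRGBA layout: 3 position + 2 UV + 4 color.
const std::size_t kFloatsPerVertex = 9;

// Hypothetical helper: packs separate attribute arrays into one tightly
// packed XYZUVRGBA array, ready to be uploaded to a single VBO.
std::vector<float> interleaveVertices(const std::vector<float>& positions,
                                      const std::vector<float>& uvs,
                                      const std::vector<float>& colors)
{
    const std::size_t vertexCount = positions.size() / 3;

    // All three arrays must describe the same number of vertices.
    assert(positions.size() == vertexCount * 3);
    assert(uvs.size() == vertexCount * 2);
    assert(colors.size() == vertexCount * 4);

    std::vector<float> interleaved;
    interleaved.reserve(vertexCount * kFloatsPerVertex);

    for (std::size_t i = 0; i < vertexCount; ++i) {
        for (std::size_t c = 0; c < 3; ++c) interleaved.push_back(positions[3 * i + c]);  // XYZ
        for (std::size_t c = 0; c < 2; ++c) interleaved.push_back(uvs[2 * i + c]);        // UV
        for (std::size_t c = 0; c < 4; ++c) interleaved.push_back(colors[4 * i + c]);     // RGBA
    }
    return interleaved;
}
```

The interleaving happens once on the CPU, so the per-frame cost is only the draw call reading from a single buffer.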

Once the data is copied over to video memory, setting up the vertex attrib pointers can be a little tricky. This is where interleaved arrays become more challenging than separate attribute buffers. An error here will cause the video card to read garbage memory and possibly segfault on the GPU. I’ve seen errors like this bring down entire operating systems, and it’s not a pretty picture.

A sheet of paper will help calculate the byte offsets. It’s important to write down the logic and then manually test it using pen and paper. The video driver must never read outside the interleaved array.
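The pen-and-paper arithmetic for the XYZUVRGBA layout can also be written down as code. The sketch below assumes 4-byte floats, which gives a stride of 36 bytes and offsets of 0, 12 and 20 bytes for position, texture coordinates and color. The names `AttributeLayout` and `staysInBounds` are hypothetical, as are the attribute locations in the commented-out GL calls.

```cpp
#include <cstddef>

static_assert(sizeof(float) == 4, "this sketch assumes 4-byte floats");

// One attribute inside an interleaved XYZUVRGBA vertex (hypothetical type).
struct AttributeLayout {
    std::size_t componentCount;  // number of floats in the attribute
    std::size_t byteOffset;      // offset from the start of each vertex
};

// Distance in bytes between consecutive vertices: 9 floats = 36 bytes.
constexpr std::size_t kStride = 9 * sizeof(float);

constexpr AttributeLayout kPosition = {3, 0};                  // XYZ  at byte 0
constexpr AttributeLayout kTexCoord = {2, 3 * sizeof(float)};  // UV   at byte 12
constexpr AttributeLayout kColor    = {4, 5 * sizeof(float)};  // RGBA at byte 20

// Returns true if fetching `attribute` for every one of `vertexCount`
// vertices stays inside a buffer of `bufferBytes` bytes.
constexpr bool staysInBounds(AttributeLayout attribute,
                             std::size_t vertexCount,
                             std::size_t bufferBytes)
{
    return vertexCount == 0 ||
           (vertexCount - 1) * kStride + attribute.byteOffset +
               attribute.componentCount * sizeof(float) <= bufferBytes;
}

// With a GL context current and the VBO bound, each attribute would then be
// described along these lines (location 1 for UVs is an assumption):
//   glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE,
//                         static_cast<GLsizei>(kStride),
//                         reinterpret_cast<const void*>(kTexCoord.byteOffset));
//   glEnableVertexAttribArray(1);
```

Running a check like `staysInBounds` before issuing the draw call is a cheap way to catch an off-by-one in the vertex count or a wrong offset before the driver does.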

Once ready, OpenGL will take care of feeding the vertex data into our shader inputs, where we will interpolate the texture coordinates and successfully sample the bound texture, as shown in the image above.

Now that we have a working foundation where we can develop custom shaders for any Entity in the scene, it’s time to start cleaning up resource management. Stay tuned for more!